Whilst the technology surrounding Artificial Intelligence (AI) itself has encountered unprecedented growth, governments are still attempting to keep pace by striking a delicate balance between promoting innovation and ensuring accountability. The same can be said for the Welsh government, specifically within the education sector.

AI is already available to us in the technology we use on a daily basis, such as text-to-speech and translation tools. The huge rise in access to AI tools, and generative AI in particular, has led to concerns about how they could be used appropriately within the education sector and the impact they have on learners.

To further understand the scope of generative AI use within schools, Estyn, the education and training inspectorate for Wales, has been tasked by the Welsh government with conducting a review.

Cabinet Secretary for Education Lynne Neagle has said: “Artificial Intelligence presents a huge potential for schools; the technology is evolving quickly, and it is vital that schools are supported to navigate change.”

Are schools well-equipped to embrace AI?

The review is primarily motivated by the Welsh government's commitment to ensure that schools and public spaces are well-equipped to embrace new technologies like AI. It aims to prepare schools to address, understand and harness the opportunities the technology provides, whilst also developing strategies to mitigate any potential risks.

Estyn’s role will predominantly focus on the current use of generative AI, exploring the potential benefits to schools whilst also considering the challenges it poses. It will assist schools and further education colleges by developing proactive and practical guidance on understanding AI.

“Safe, ethical and responsible”

As schools and other settings are now able to use AI tools to support learning, it is essential to ensure all such use is safe, ethical and responsible. Estyn will also ensure all practitioners develop the skills and knowledge to use this technology in supporting learners to thrive.

The first phase towards this will include a survey for schools and pupil referral units asking for their views and experience, followed by more in-depth engagement with teachers. The findings are expected to be published in summer 2025.

Owen Evans, His Majesty’s Chief Inspector at Estyn, has said: “…Having a clearer understanding of the integration of AI in schools at a national level will enable government to better support and guide the education community in the use of this powerful technology.”

Whilst the focus is on generative AI, it is hoped that the review will be extended to include other types of AI, including predictive models which also pose significant risks.

The use of AI within Education

There are already various uses of AI within the public and education sectors, including biometric fingerprint and facial recognition, pupil attainment prediction, assessing pupil IT activity to monitor for safeguarding concerns, as well as supporting activities such as pupil report writing, marking work and lesson planning.

The Welsh government’s recently published guidance on Generative artificial intelligence in education (10 January 2025) discusses how generative AI can offer further opportunities for the education sector when used correctly.

Current education tech is also being upgraded to include elements of AI functionality within them, allowing users to experiment without worrying about the risk of whether it fits into their organisational strategy and risk tolerance.

AI tools to support learners

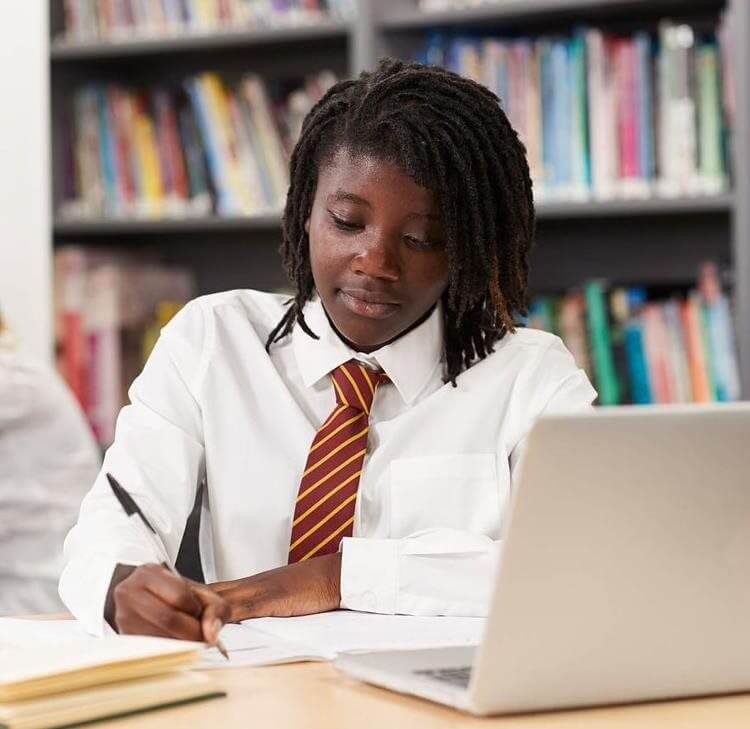

The use of AI tools, whether generative or other forms (such as predictive) in learning should be purposeful, as with all tools in the classroom. Its use should ultimately support learners to become ambitious, capable learners; enterprising, creative contributors; ethical, responsible citizens; and healthy, confident individuals.

When used responsibly, safely and purposefully, AI tools provided to schools and further education providers have the potential to reduce workload and to support a variety of tasks, such as:

- Supporting the development of content for use in learning.

- Assisting with some routine administrative functions.

- Helping provide more personalised learning experiences.

- Supporting lesson planning, marking, feedback and reporting.

- Providing contexts to support development of critical thinking skills.

- Supporting school-level curriculum and assessment design.

Challenges to consider

Although there are various reasons why AI would aid learning and support the sector, there is always an element of concern with the risks if AI is not understood and not used in the correct way.

- Data protection: AI systems often require access to large datasets, which may include personal data. Models can be trained on this personal data during the building phase, meaning that people may be unaware of and unable to access their rights in relation to this processing. Furthermore, there can be data protection risks when using personal data in the implementation of AI tools, including lack of transparency, risks in relation to automated processing and the potential for inputs to further train models.

- Security and safeguarding: AI systems can be vulnerable to hacking and other cyber threats. Malicious actors could potentially manipulate AI systems to behave unpredictably, by creating harmful outputs to access information that was used to create the model itself, or to provide content that is inappropriate for the emotional maturity or health of the user.

- Bias and discrimination: AI tools may reflect and amplify the biases and stereotypes that exist in the data upon which they are trained. As a result, some generative AI systems may produce content that can be offensive and harmful. Schools may wish to focus on learners’ development of critical thinking and digital literacy skills to support them to engage critically with the outputs of these tools. It is important that learners and practitioners proactively question and challenge the assumptions and possible implications of the content generated by AI systems.

- Ethical concerns: AI poses ethical questions, particularly around use where it affects vulnerable groups, such as students. A lack of transparency in how AI systems operate can make it difficult to understand how educational recommendations or decisions are made, raising questions about accountability. Engaging in open conversations about the possibilities and limitations of AI technologies should go hand-in-hand with discussing the importance of ethical and responsible use.

- Other regulatory challenges: The rapid development of AI technologies poses challenges for regulators trying to ensure that AI is used safely and ethically. Intellectual property rights are a particular concern when entering pupil work in AI marking/assessment tools, and these rights are at risk if pupils’ work is used to train the model. It is almost inevitable that laws and regulations will fail to keep pace with technological advancements. This is something that Estyn will need to consider in its review.

The Future of AI

Integrating AI tools into education presents many opportunities. However, their use must prioritise safety, responsibility, ethics, trust and inclusivity. Before integrating AI, schools should perform a thorough risk analysis, similar to the process used for other digital tools, software or services.

This is essential to evaluate the suitability of AI, identify potential risks and ensure a safe and secure learning environment. Schools should continue to prioritise learners’ safety, security and well-being when considering how best to respond and adapt to emerging technologies in education.

Learners and teachers should know when AI is used in creating content or assisting with tasks, ensuring everyone understands its role. This helps build trust and ensures that learners are aware of the strengths and limitations of AI, allowing them to use it responsibly and effectively.

You can find out more about Estyn’s Review of Generative AI here and we’ll provide an update on Estyn’s findings following completion of the review in Summer 2025.

Expert advice and support

We advise schools, trusts, colleges and universities on the legal and practical concerns of using AI within the education environment. Please get in touch to find out more. We also offer a support pack to equip schools with governance resources to safely implement AI.

Claire Archibald

Legal Director

claire.archibald@brownejacobson.com

+44 (0)330 045 1165
